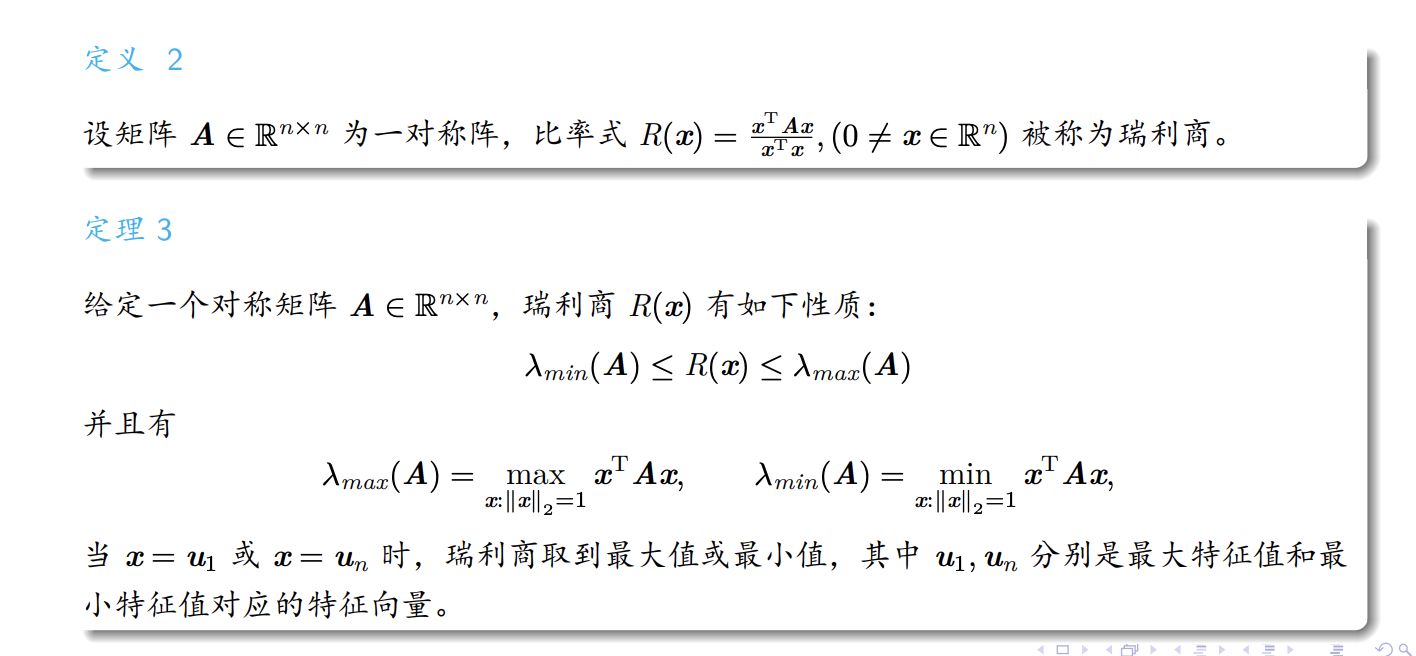

What properties of the sigmoid function make it useful in neural networks?

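1. The sigmoid function smoothly maps the whole real line into the interval (0, 1).

So the sigmoid function can meet the perceptron's requirement of representing its output as a binary result: outputs close to 0 and close to 1 play the roles of the perceptron's 0 and 1.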

- Sigmoid neurons have much of the same qualitative behaviour as perceptrons. The sigmoid function smoothly maps the real line into (0, 1), and for large positive or negative inputs its output approaches 1 or 0, so it can mimic the binary output a perceptron produces.

- The smoothness of the sigmoid function makes neural networks easier to adjust. With perceptrons, changing the weights and bias of a single perceptron can flip its output from 0 to 1 and change the outputs of the whole network drastically. With sigmoid neurons, by contrast, a small adjustment has only a small effect on the network. The sigmoid is monotonically increasing, continuously differentiable, and has a simple derivative, which makes it easier to predict how the output will change.
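A minimal Python sketch of the two activations being compared. The names `sigmoid` and `step` are our own, and treating $z = 0$ as output 0 in the step function is an assumed convention. For a few weighted inputs $z$ it prints both outputs: the sigmoid stays strictly inside (0, 1), and thresholding it at 0.5 reproduces the perceptron's binary decision.

```python
import math

def sigmoid(z: float) -> float:
    """Logistic sigmoid 1 / (1 + e^(-z)); output lies strictly between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))

def step(z: float) -> int:
    """Perceptron-style step activation (assumed convention: z == 0 gives 0)."""
    return 1 if z > 0 else 0

inputs = [-10.0, -2.0, -0.5, 0.5, 2.0, 10.0]
for z in inputs:
    s = sigmoid(z)
    # Thresholding the sigmoid at 0.5 recovers the perceptron's binary decision.
    print(f"z={z:6.1f}  step={step(z)}  sigmoid={s:.4f}  thresholded={int(s > 0.5)}")
```

This direct formula is fine for illustration, but `math.exp(-z)` raises `OverflowError` for very negative inputs (roughly $z < -709$), so a production version would need a numerically guarded form.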

2. Exploiting the smoothness of the sigmoid function

When we adjust the weights and bias of a single neuron, the effect of that adjustment on the final result is small. The smoothness of $\sigma$ means that small changes $\Delta w_j$ in the weights and $\Delta b$ in the bias will produce a small change $\Delta \text{output}$ in the output from the neuron. So while sigmoid neurons have much of the same qualitative behaviour as perceptrons, they make it much easier to figure out how changing the weights and biases will change the output.
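The "small change in, small change out" claim can be made precise with the standard first-order (Taylor) approximation, where the sum runs over all weights. For a single neuron, assuming inputs $x_j$, weighted input $z = \sum_j w_j x_j + b$ and output $\sigma(z)$, the partial derivatives are $\sigma'(z)\,x_j$ and $\sigma'(z)$, so the change in output is approximately linear in the parameter changes:

$$
\Delta \text{output} \approx \sum_j \frac{\partial\, \text{output}}{\partial w_j}\,\Delta w_j + \frac{\partial\, \text{output}}{\partial b}\,\Delta b
= \sigma'(z)\left(\sum_j x_j\,\Delta w_j + \Delta b\right)
$$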

In shape, the sigmoid function $\sigma(z) = 1/(1 + e^{-z})$ is monotonically increasing and continuously differentiable, and its derivative has the simple form $\sigma'(z) = \sigma(z)\,(1 - \sigma(z))$. This makes it a suitable activation function.
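A small sketch checking the closed-form derivative $\sigma'(z) = \sigma(z)(1 - \sigma(z))$ against a central finite difference. The helper names and the step size `h = 1e-6` are our own choices for illustration. The derivative is largest at $z = 0$, where it equals exactly 0.25.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def sigmoid_prime(z: float) -> float:
    # Closed form: the derivative is expressed through sigma itself.
    s = sigmoid(z)
    return s * (1.0 - s)

def numerical_derivative(f, z: float, h: float = 1e-6) -> float:
    # Central finite difference, used only to check the closed form.
    return (f(z + h) - f(z - h)) / (2.0 * h)

points = [-4.0, -1.0, 0.0, 1.0, 4.0]
for z in points:
    print(f"z={z:5.1f}  closed-form={sigmoid_prime(z):.6f}  "
          f"finite-diff={numerical_derivative(sigmoid, z):.6f}")
```

Because the derivative reuses the already-computed $\sigma(z)$, evaluating it costs almost nothing once the forward value is known.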

What distinguishes neural networks from traditional computing is that they adjust their weights and biases automatically to obtain the desired results.
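A toy sketch of what automatically adjusting weights and biases can look like for a single sigmoid neuron, using plain gradient descent on a squared-error cost. The notes do not name a learning algorithm: the task (logical OR), the zero initialisation, the learning rate `eta = 1.0` and the 2000 epochs are all assumptions made for illustration. The update relies on $\sigma'(z) = \sigma(z)(1 - \sigma(z))$, which is where the sigmoid's smoothness is used.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical training data: logical OR of two binary inputs.
data = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]

w = [0.0, 0.0]  # weights (assumed zero initialisation)
b = 0.0         # bias
eta = 1.0       # learning rate (assumed)

for epoch in range(2000):
    for (x1, x2), y in data:
        a = sigmoid(w[0] * x1 + w[1] * x2 + b)
        # dC/dz for the cost C = 0.5 * (a - y)^2, using sigma'(z) = a * (1 - a).
        delta = (a - y) * a * (1.0 - a)
        # Move each parameter a small step against its gradient.
        w[0] -= eta * delta * x1
        w[1] -= eta * delta * x2
        b -= eta * delta

for (x1, x2), y in data:
    a = sigmoid(w[0] * x1 + w[1] * x2 + b)
    print(f"input={(x1, x2)}  target={y}  output={a:.3f}")
```

No rule was written by hand for OR; the weights and bias end up wherever the repeated small gradient steps take them.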

When we use perceptrons, adjusting the weights and bias at one point can sometimes change the overall result of the whole network in ways we do not want, because a small change can flip a perceptron's output from 0 to 1.
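A toy illustration with hypothetical numbers, chosen so the neuron sits just below its threshold: a weight change of 0.002 flips the perceptron's output from 0 to 1, while the sigmoid output moves by only about 0.0005 (roughly $\sigma'(0) \times 0.002 = 0.25 \times 0.002$).

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def perceptron(z: float) -> int:
    # Step activation: output 1 if z > 0, else 0.
    return 1 if z > 0 else 0

# Hypothetical neuron: one input x, weight w and bias b, sitting just below the threshold.
x, w, b = 1.0, 0.5, -0.501
dw = 0.002  # a small adjustment to the weight

z_before = w * x + b
z_after = (w + dw) * x + b

print("perceptron:", perceptron(z_before), "->", perceptron(z_after))
print("sigmoid:   ", round(sigmoid(z_before), 4), "->", round(sigmoid(z_after), 4))
```

In a larger network the perceptron's jump from 0 to 1 would propagate to every neuron downstream, whereas the sigmoid's small shift produces only a proportionally small change.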

Summary: